Microsoft has introduced a new policy-controlled option that allows designated users in specialized roles, such as legal, investigative, or moderation teams, to work with sensitive content in Microsoft 365 Copilot Chat while organizational safeguards remain in place. The new policy is disabled by default.

Timeline

The rollout was completed in September 2025.

Impact for your organization

Administrators can enable a new policy that permits selected users to interact with sensitive or potentially harmful content in Copilot Chat under controlled conditions. By default, the feature is turned off and has no impact until the policy is explicitly assigned to target users.

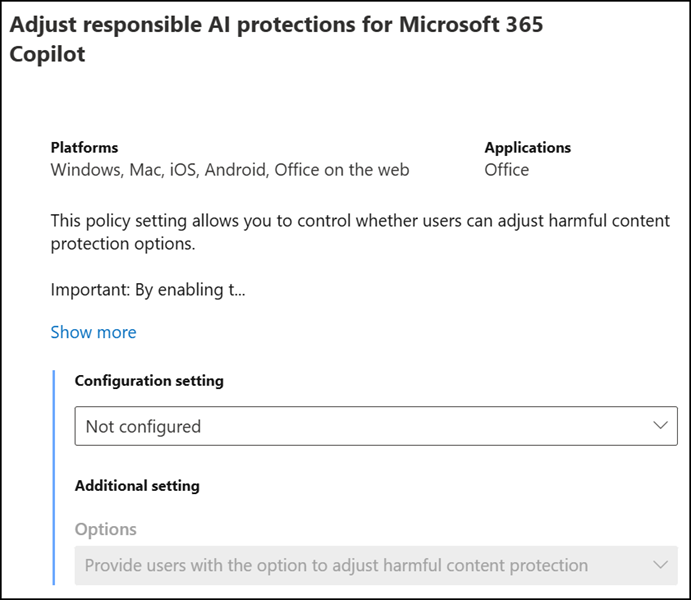

The Office Cloud Policy service now includes a new policy: Adjust responsible AI protections for Microsoft 365 Copilot.

Adjust responsible AI protections for Microsoft 365 Copilot

This policy setting allows you to control whether users can adjust harmful content protection options.

Important:

By enabling this option, your organization opens up Microsoft 365 Copilot Chat's broad capabilities for use in specialized roles and industries where exposure to content harms is necessary (for example, content moderation, legal review, or law enforcement).

This setting allows responses to your users to include sexual content, violence, hate, and self-harm. Disabling harmful content protection may result in system responses that contain content that violates the Microsoft Generative AI Services Code of Conduct. This policy applies only to Microsoft 365 Copilot Chat.

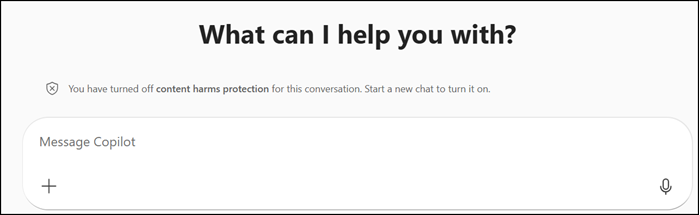

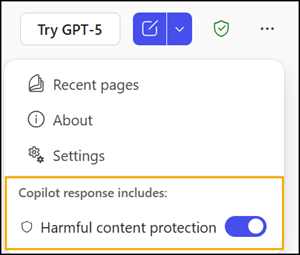

If this policy is configured for them, users will see a new content protection option in Microsoft 365 Copilot Chat. Harmful content protection is on by default in every new chat, and users must turn it off explicitly. Users who are not covered by the policy cannot disable harmful content protection.

Copilot Chat also displays a visual notice while harmful content protection is turned off.