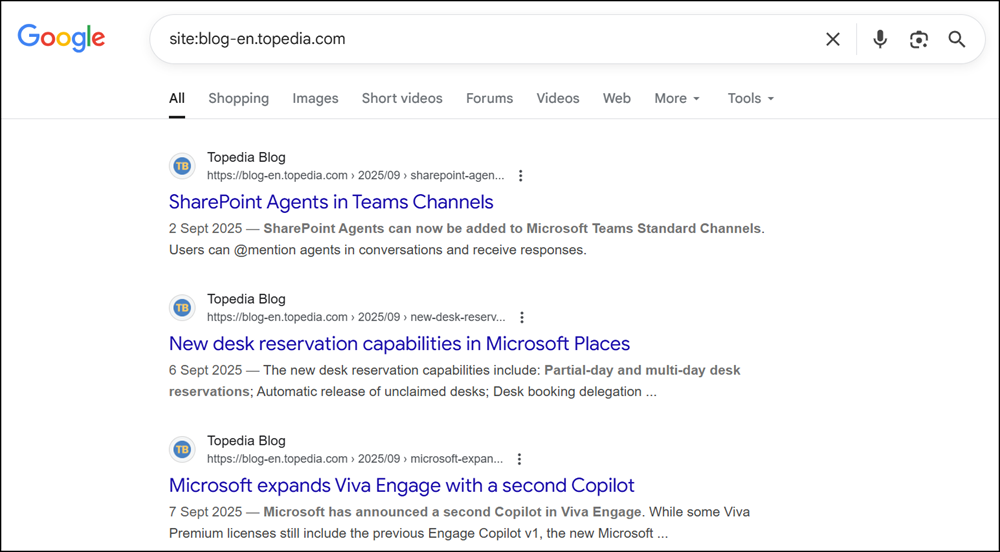

Two weeks ago, I noticed that the Google Crawl Bot was visiting my blog, but it wasn’t actually crawling my pages.

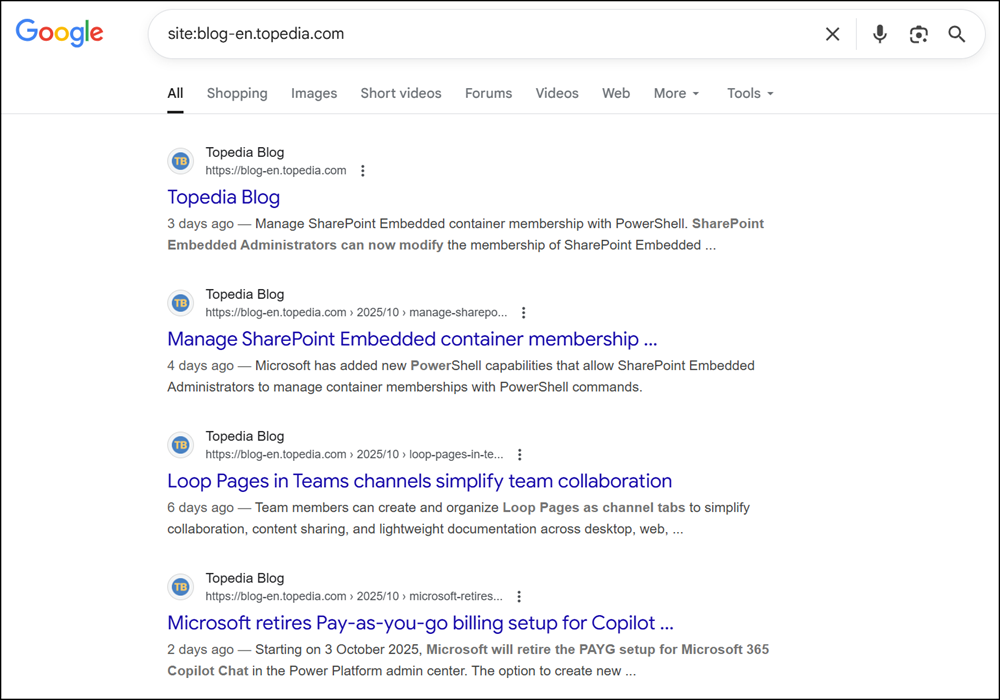

Checking the search results confirmed it: Google hadn’t crawled any of my posts since 8 September due to an error.

I have no issues with the Bing Bot, ChatGPT Bot, or other AI crawlers. I haven’t had any further problems with the Bing Bot since my issues with Bing in April 2023.

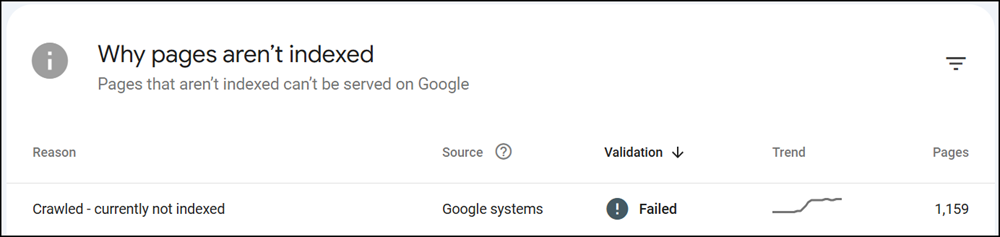

The first step was to check Google Webmasters and review the page statuses.

The majority of my pages showed crawl issues. New pages weren’t indexed, and existing pages weren’t updated.

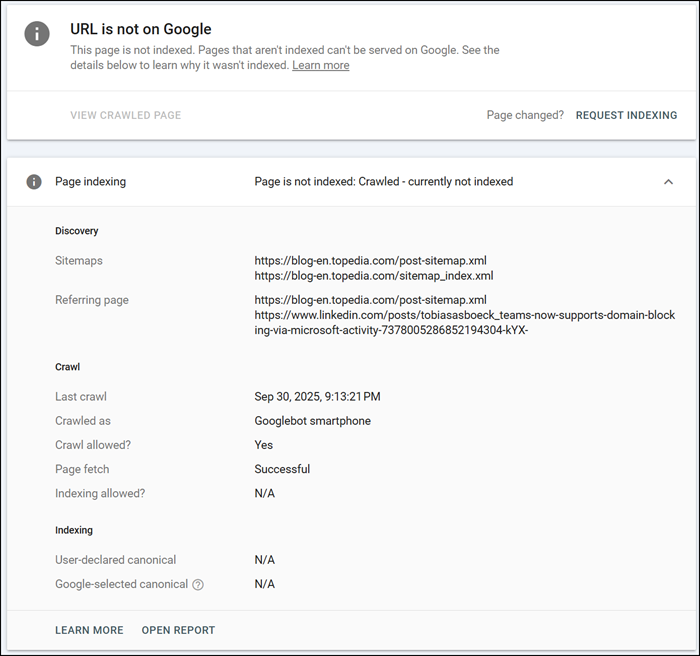

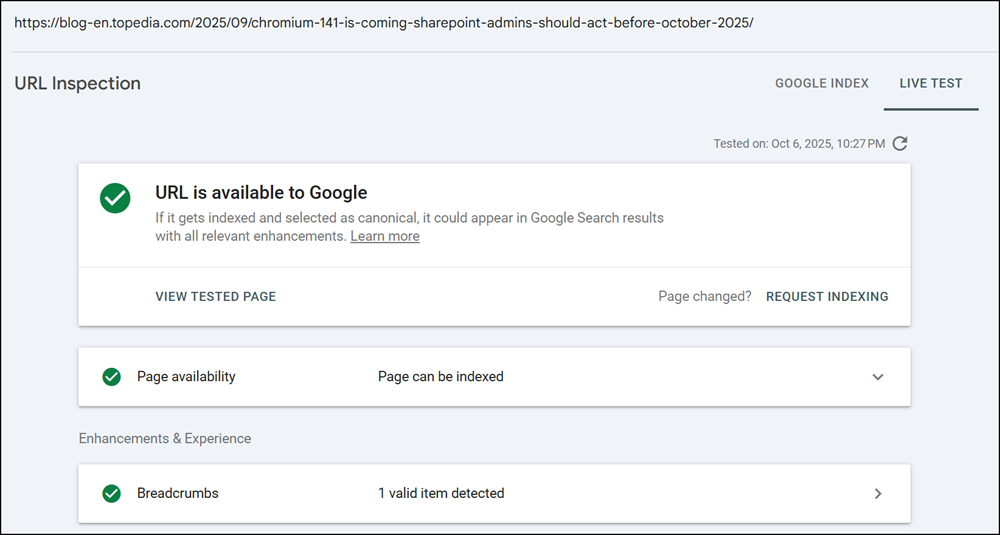

Next, I checked one of my popular posts in Google Webmasters.

All new posts published since 8 September were missing from Google’s index.

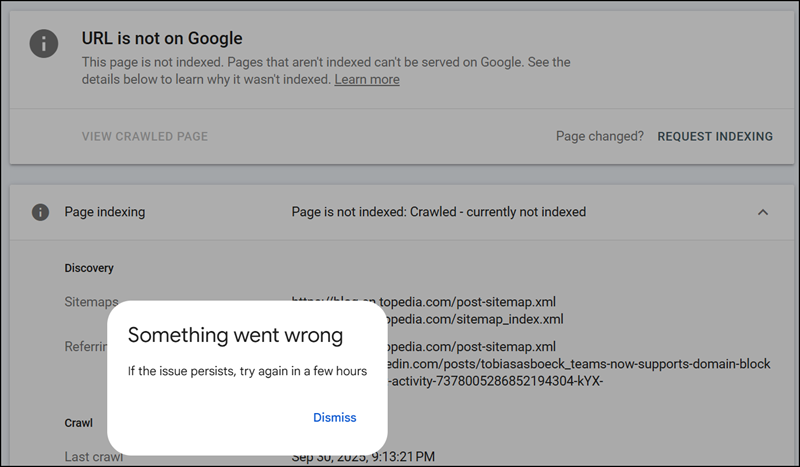

Running a live URL test returned an error, and the same issue occurred for all 1,159 URLs.

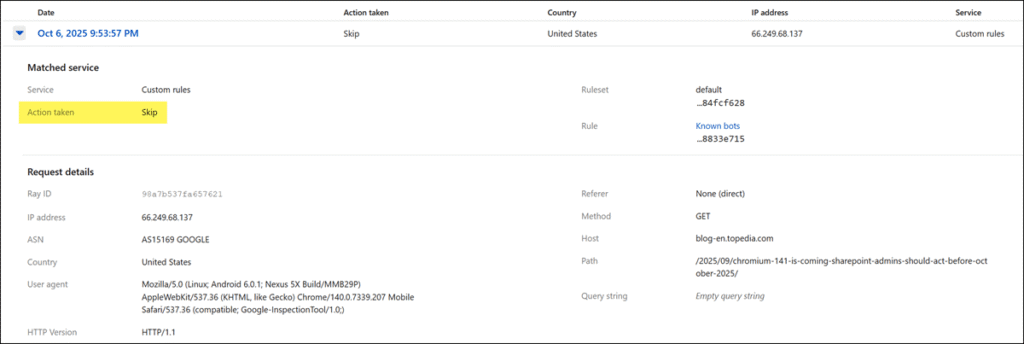

I then checked Cloudflare to see if it was blocking Googlebot. It wasn’t: Cloudflare follows my rule to skip known bots.

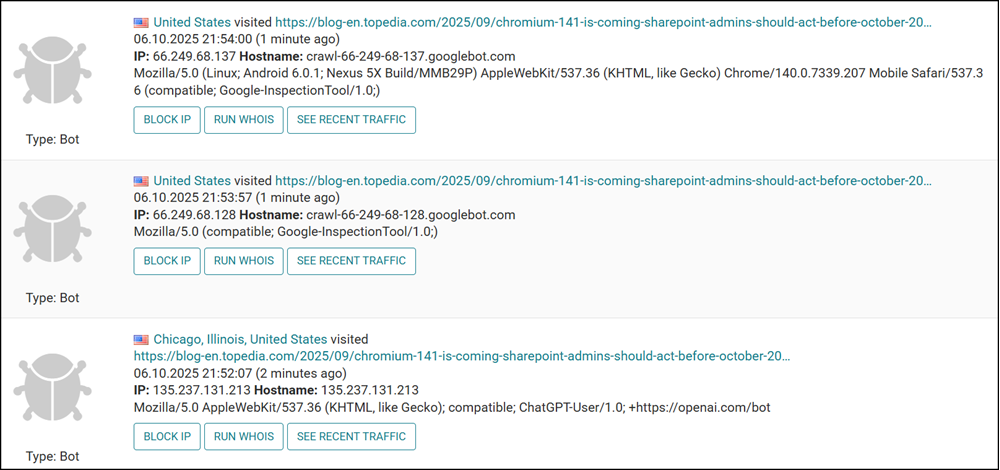

Next, I checked whether my second security layer was blocking the bot. Again, no issue — both Google and ChatGPT bots were able to visit successfully. Notably, ChatGPT never had a problem; the issues were only with Googlebot.

So, I opened a support ticket with Google Webmasters to get guidance. I also wanted to see how their support compared, especially after my terrible experience with Bing Webmaster support in 2023, which never responded.

Google Webmasters support informed me that their bot couldn’t render my pages because it was receiving a 5xx error.

> After our investigation we have noticed that Googlebot is having trouble rendering the pages.

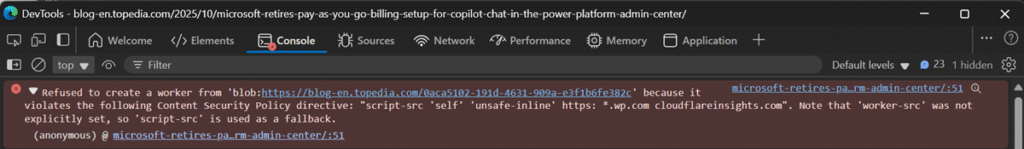

I then noticed a Content Security Policy (CSP) issue in my browser’s developer tools while inspecting the site.

I asked ChatGPT whether Googlebot’s rendering problems were related to the CSP issue. The answer was straightforward.

> That Content Security Policy (CSP) error is the reason Googlebot (and Google’s rendering engine) can’t render your page correctly.
>
> If Googlebot’s rendering engine can’t execute a worker or essential JS, the page stops midway. That’s why your Live Test URL failed — the page wouldn’t render fully due to the blocked worker. Once CSP allows it, rendering completes normally and indexing stabilizes.
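
To make the "blocked worker" part more concrete, here is a minimal sketch of how a browser decides which CSP directive governs workers. It assumes the fallback order described in CSP Level 3 (`worker-src`, then `child-src`, then `script-src`, then `default-src`). The policy string and the function names are hypothetical and are not taken from my site, and the `blob:` check is a simplified literal match rather than full source matching.

```python
from __future__ import annotations

# Fallback order for workers as described in CSP Level 3:
# worker-src -> child-src -> script-src -> default-src.
WORKER_FALLBACK = ("worker-src", "child-src", "script-src", "default-src")


def parse_csp(policy: str) -> dict[str, list[str]]:
    """Split a CSP header value into {directive: [source, ...]}."""
    directives: dict[str, list[str]] = {}
    for part in policy.split(";"):
        tokens = part.split()
        if not tokens:
            continue
        name = tokens[0].lower()
        # A repeated directive is ignored, so keep only the first one.
        directives.setdefault(name, tokens[1:])
    return directives


def effective_worker_sources(policy: str) -> tuple[str | None, list[str]]:
    """Return the directive that governs workers and its source list."""
    directives = parse_csp(policy)
    for name in WORKER_FALLBACK:
        if name in directives:
            return name, directives[name]
    # No applicable directive at all: workers are not restricted by this policy.
    return None, []


# Hypothetical policy for illustration only.
policy = "default-src 'self'; script-src 'self' https://cdn.example.com"
governing, sources = effective_worker_sources(policy)
print("governing directive:", governing)
print("blob: workers allowed:", "blob:" in sources)
```

With a policy like this one, a worker created from a `blob:` URL falls under `script-src`, which does not list `blob:`, so the worker is refused even though nothing in the policy mentions workers explicitly.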

Presumably, Google updated or hardened its mobile crawler. My CSP issue wasn’t new in September, but some change on Google’s side made it start failing from September onwards. I updated my CSP to include the missing worker source.
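
As an illustration of that kind of change, the sketch below adds an explicit `worker-src` directive to an existing policy string. The starting policy, the helper name `with_worker_src`, and the values `'self'` and `blob:` are assumptions for the example; the right sources depend on where your workers are actually loaded from.

```python
def with_worker_src(policy: str, sources: list[str]) -> str:
    """Return the policy with an explicit worker-src directive set to `sources`."""
    kept = []
    for part in policy.split(";"):
        tokens = part.split()
        # Drop empty segments and any existing worker-src so it is not duplicated.
        if tokens and tokens[0].lower() != "worker-src":
            kept.append(" ".join(tokens))
    kept.append("worker-src " + " ".join(sources))
    return "; ".join(kept)


# Hypothetical starting policy, for illustration only.
old_policy = "default-src 'self'; script-src 'self'"
print(with_worker_src(old_policy, ["'self'", "blob:"]))
# default-src 'self'; script-src 'self'; worker-src 'self' blob:
```

Keeping the directive as narrow as possible (only the sources the workers really need) avoids loosening the rest of the policy.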

After publishing the updated CSP, I reran the live URL test, and Google instantly marked the page as “available.”

As a final step, I took the opportunity to further improve my CSP configuration.

I requested a validation update for all affected pages, which can take some time. The good news is that Googlebot has already begun crawling some of the missing pages.

Conclusion:

If you’re also experiencing issues with Googlebot, make sure to check your site’s Content Security Policy. A restrictive or misconfigured CSP can easily block Google’s crawler from rendering and indexing your pages.
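
A quick way to start that check is to look at the CSP headers your server actually sends. The following is a rough sketch using only Python's standard library. The function names and the `csp-check/0.1` User-Agent are made up for this example, and it only sees policies delivered as HTTP headers, not ones set through a `<meta>` tag.

```python
from __future__ import annotations

from urllib.error import HTTPError
from urllib.request import Request, urlopen

CSP_HEADERS = ("content-security-policy", "content-security-policy-report-only")


def csp_from_headers(headers) -> dict[str, list[str]]:
    """Collect CSP-related values from (name, value) pairs, case-insensitively."""
    found: dict[str, list[str]] = {}
    for name, value in headers:
        key = name.lower()
        if key in CSP_HEADERS:
            # A response may carry several policies; keep all of them.
            found.setdefault(key, []).append(value)
    return found


def fetch_csp(url: str, timeout: float = 10.0) -> tuple[int, dict[str, list[str]]]:
    """Request `url` and return (status code, CSP headers found)."""
    # Hypothetical User-Agent; some firewalls treat unknown agents differently.
    request = Request(url, headers={"User-Agent": "csp-check/0.1"})
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status, csp_from_headers(response.headers.items())
    except HTTPError as err:
        # 4xx/5xx responses still carry headers worth inspecting.
        return err.code, csp_from_headers(err.headers.items())


# Example call (replace the URL with one of your own pages):
# status, policies = fetch_csp("https://example.com/")
```

If the policy returned has no directive that covers your workers or scripts, that is a good place to look before digging into firewall or bot rules.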